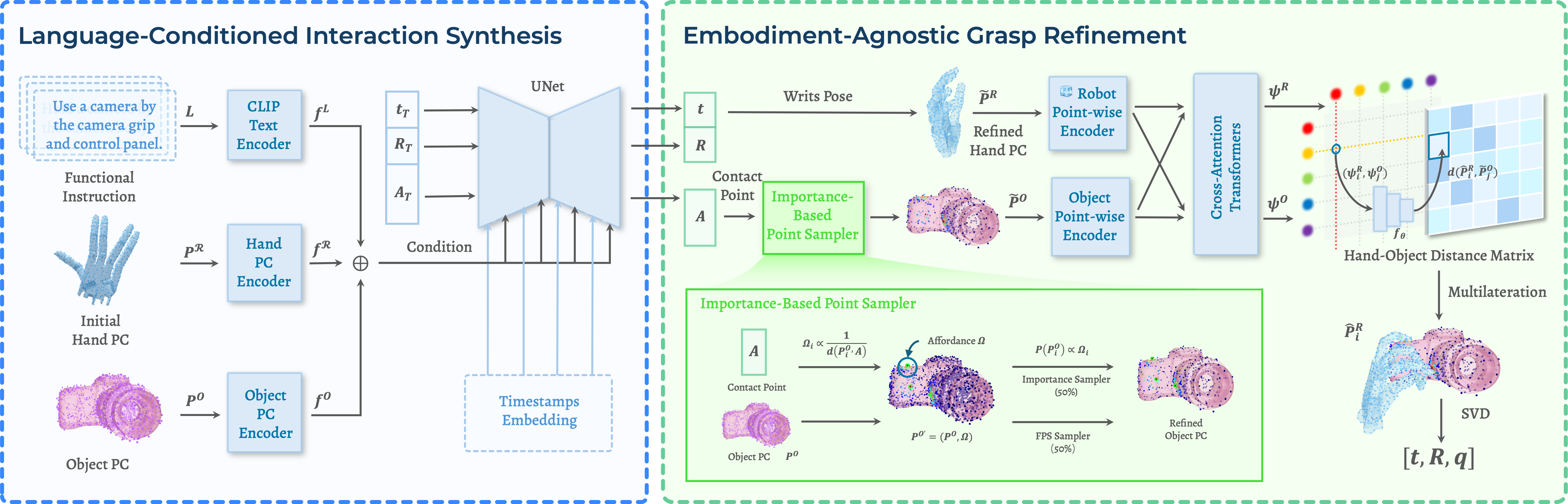

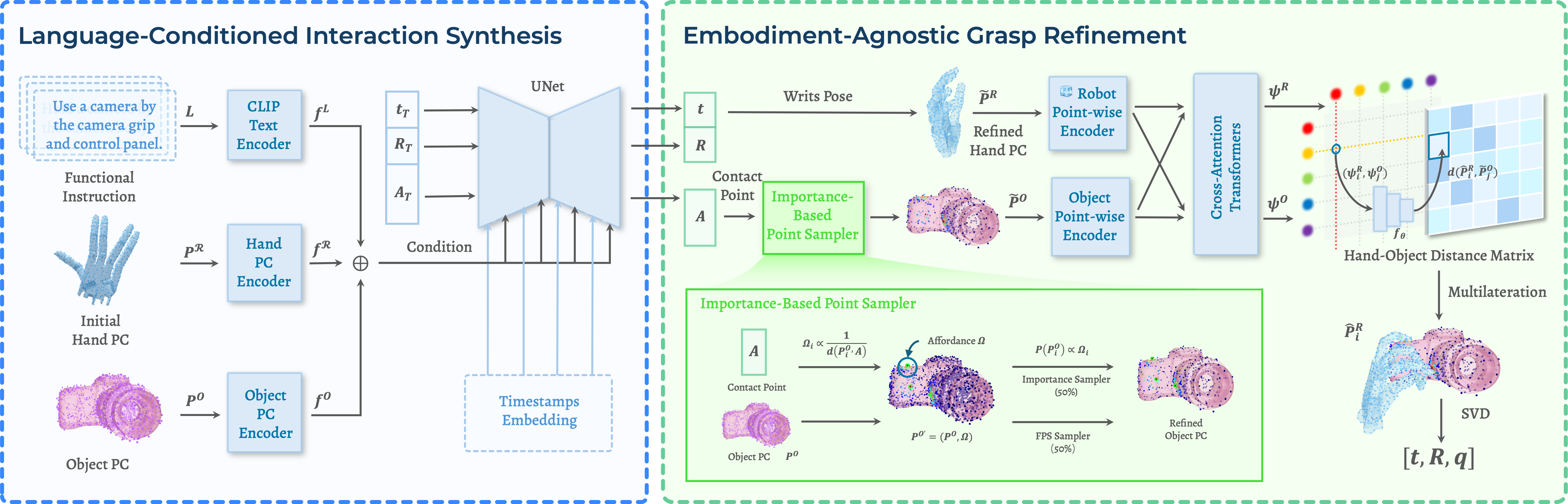

Pipeline Overview

Functional dexterous grasping means grasping an object in a way that serves its intended use. It is a challenging but essential capability for robots that need to interact with objects in line with human intent. Existing methods focus primarily on grasp stability and do not address functional intent. In this work, we present Functional $\mathcal{D(R,O)}$ Grasp, a language-guided framework that generates intent-aligned grasps and adapts across robot platforms. We learn platform-agnostic intermediate representations that translate a language description of the desired functional grasp into grasps that can be executed on different robotic hands. The framework generates grasps suited to an object's intended use and covers multiple functional requirements (use, hold, handover, and liftup). Our approach achieves a 75.1% success rate on unseen objects in simulation, significantly outperforming the baselines. Real-world experiments on the LeapHand platform further validate the approach. Our work bridges the gap between functional intent and cross-platform dexterous execution, enabling robots to perform purposeful grasps with a single unified model.